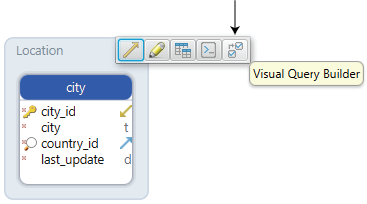

Visual Query Builder

The Visual Query Builder lets you create SQL queries visually and execute them, which is helpful for users with limited SQL knowledge. The Visual Query Builder opens inside diagrams, is saved in the model file, and can be reopened from the Data Tools menu.

How to Start the Query Editor

Start the Query Editor by clicking the table header. Alternatively, start an empty editor and drag and drop a table from the diagram into it.

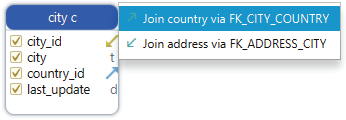

Join Further Tables to the Query

You can join further tables to the query using foreign keys or virtual foreign keys. Virtual foreign keys are created by dragging and dropping columns in the diagram, and they are saved in the model file.

Add further tables to the diagram by clicking the small arrow icon next to a column. Click the label on the foreign key line to switch between join types: INNER JOIN, LEFT JOIN, or EXISTS.
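To make the three join types concrete, here is a minimal sketch using Python's built-in `sqlite3` module. The `customers` and `orders` tables and their data are hypothetical, and the SQL shows the general shape of each join type rather than the exact text the Visual Query Builder generates.

```python
import sqlite3

# Hypothetical schema: orders.customer_id is a foreign key to customers.id.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
    CREATE TABLE orders (
        id INTEGER PRIMARY KEY,
        customer_id INTEGER NOT NULL REFERENCES customers(id),
        total REAL NOT NULL
    );
    INSERT INTO customers (id, name) VALUES (1, 'Ann'), (2, 'Bob'), (3, 'Cy');
    INSERT INTO orders (id, customer_id, total) VALUES (10, 1, 25.0), (11, 1, 40.0), (12, 2, 15.0);
""")

# INNER JOIN: one row per matching customer/order pair; Cy (no orders) is dropped.
inner_rows = conn.execute("""
    SELECT c.name, o.total
    FROM customers c
    INNER JOIN orders o ON o.customer_id = c.id
    ORDER BY c.name, o.total
""").fetchall()

# LEFT JOIN: every customer is kept; customers without orders get NULL (None) order columns.
left_rows = conn.execute("""
    SELECT c.name, o.total
    FROM customers c
    LEFT JOIN orders o ON o.customer_id = c.id
    ORDER BY c.name, o.total
""").fetchall()

# EXISTS: each customer appears at most once, and only if at least one order exists.
exists_rows = conn.execute("""
    SELECT c.name
    FROM customers c
    WHERE EXISTS (SELECT 1 FROM orders o WHERE o.customer_id = c.id)
    ORDER BY c.name
""").fetchall()
```

INNER JOIN drops customers without orders, LEFT JOIN keeps them with empty order columns, and EXISTS returns each matching customer only once, which suits queries that only need to test whether related rows exist.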

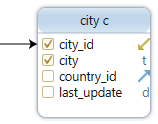

Choose the Columns to Select

Choose the columns to select by ticking the column checkboxes.
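Ticking column checkboxes amounts to listing those columns in the SELECT clause. The sketch below, using Python's `sqlite3` and a hypothetical `customers` table, shows that only the listed columns come back; it is an illustration of the idea, not the builder's own output.

```python
import sqlite3

# Hypothetical table with more columns than the query needs.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, city TEXT, email TEXT);
    INSERT INTO customers VALUES (1, 'Ann', 'Oslo', 'ann@example.com'),
                                 (2, 'Bob', 'Rome', 'bob@example.com');
""")

# Ticking only "name" and "city" corresponds to selecting just those two columns.
cursor = conn.execute("SELECT name, city FROM customers ORDER BY id")
selected_columns = [d[0] for d in cursor.description]  # column names of the result
rows = cursor.fetchall()
```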

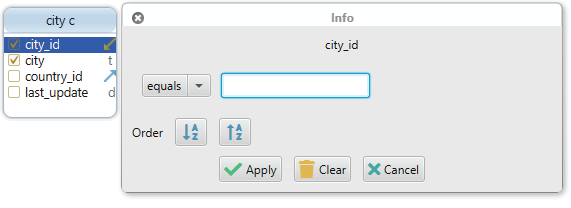

Filter the Data

Data can be filtered by right-clicking any of the columns and choosing the 'Filter' option.
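A column filter ends up as a condition in the query's WHERE clause. As a hedged sketch with a hypothetical `orders` table in Python's `sqlite3`, two filters combine with AND; the `?` placeholders keep the filter values separate from the SQL text. The exact SQL the builder writes may differ.

```python
import sqlite3

# Hypothetical table used only for this illustration.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE orders (id INTEGER PRIMARY KEY, status TEXT, total REAL);
    INSERT INTO orders VALUES (1, 'open', 25.0), (2, 'shipped', 40.0), (3, 'open', 90.0);
""")

# Filters on "status" and "total" become WHERE conditions joined with AND.
filtered = conn.execute(
    "SELECT id, total FROM orders WHERE status = ? AND total > ? ORDER BY id",
    ("open", 30.0),
).fetchall()
```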

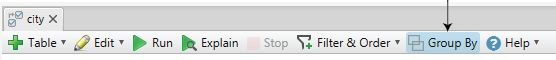

Use Group By

Enable the toggle button in the query builder menu to make the query use a GROUP BY clause. You can then apply aggregate functions by right-clicking a column and choosing Aggregate, with functions such as MIN, MAX, SUM, and AVG.
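The following sketch shows what a grouped query with aggregates computes, using Python's `sqlite3` and a hypothetical `orders` table. Columns that are not aggregated (here `customer`) form the groups, and each aggregate is evaluated once per group. This illustrates standard GROUP BY semantics rather than the builder's generated SQL.

```python
import sqlite3

# Hypothetical table used only for this illustration.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, total REAL);
    INSERT INTO orders VALUES (1, 'Ann', 25.0), (2, 'Ann', 40.0), (3, 'Bob', 15.0);
""")

# One result row per customer; each aggregate is computed over that customer's orders.
summary = conn.execute("""
    SELECT customer, COUNT(*), MIN(total), MAX(total), SUM(total), AVG(total)
    FROM orders
    GROUP BY customer
    ORDER BY customer
""").fetchall()
```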

Save Result to File

As in the SQL Editor, the results shown in the Result Pane can be saved to a file.
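In DbSchema this is done from the Result Pane. For comparison, the sketch below performs a similar export by hand with Python's `sqlite3` and `csv` modules. The table, the file name `result.csv`, and the choice of CSV are assumptions for illustration; this is not DbSchema's export code, and the file formats DbSchema offers are not covered here.

```python
import csv
import sqlite3
import tempfile
from pathlib import Path

# Hypothetical query result to export.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, city TEXT);
    INSERT INTO customers VALUES (1, 'Ann', 'Oslo'), (2, 'Bob', 'Rome');
""")
cursor = conn.execute("SELECT name, city FROM customers ORDER BY id")
header = [d[0] for d in cursor.description]

# Write the header row, then every result row, to a CSV file in a temporary directory.
out_path = Path(tempfile.mkdtemp()) / "result.csv"
with out_path.open("w", newline="", encoding="utf-8") as f:
    writer = csv.writer(f)
    writer.writerow(header)
    writer.writerows(cursor)  # iterating the cursor yields the remaining rows

# Read the file back to check what was saved.
with out_path.open(newline="", encoding="utf-8") as f:
    saved_rows = list(csv.reader(f))
```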

Dropping the Query Editor

The Query Editor can be dropped by right-clicking it in the structure tree, under 'Diagrams'.

When you close a Query Editor, DbSchema asks whether to keep the editor in the design model (close the editor but keep a copy in the design model, so it can be reopened at any time) or to close it and drop it from the design model as well.