DbSchemaCLI Universal Command Line Client

DbSchemaCLI is a command-line client that can start the Floating License Server and act as a database client for multiple databases simultaneously. DbSchemaCLI is part of the standard installation kit.

Features

Floating License Server

The Floating License Server lets multiple people in your team use DbSchema, but ensures that only a limited number are using it at the same time, based on how many floating licenses you’ve purchased. The server is part of DbSchemaCLI, a command-prompt tool included in the DbSchema installation package. There are two ways of running the Floating License Server:

1. Run FLS from Docker

To start the Floating License Server from Docker, please use the official Docker image at Docker Hub.

2. Start FLS manually from DbSchemaCLI

The Floating License Server can be started from DbSchemaCLI, which is part of the standard installation kit.

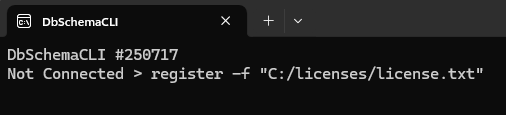

- Open a terminal or command prompt and start DbSchemaCLI

- Load the floating license from a file using

register -f "path-to-file"

- Start the license server using

license server -s -

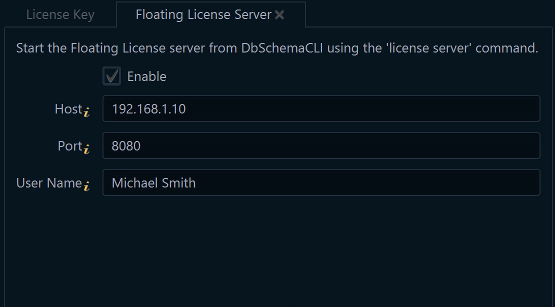

On each client machine, open DbSchema, go to Help → Registration Dialog → Floating License Server, and enter the server host and port

- To monitor the server, use

license server -l (list active users) and license server -i (server status)

- To run it as a Docker container in Kubernetes, you need to configure liveness and readiness probes:

healthChecks:
  livenessProbe:
    exec:
      command:
        - true
    initialDelaySeconds: 30
    failureThreshold: 10
    periodSeconds: 60
    successThreshold: 1
    timeoutSeconds: 60
  readinessProbe:
    exec:
      command:
        - true
    initialDelaySeconds: 30
    failureThreshold: 10
    periodSeconds: 60
    successThreshold: 1
    timeoutSeconds: 60

On the client computer, configure the Floating License Server in the Help → Registration Dialog → Floating License tab.

To automatically restart the Floating License Server on Windows, save the following commands in a file (e.g. start-server.bat) and add the file to the Windows Task Scheduler:

DbSchemaCLI > license server -s

DbSchemaCLI > sleep

This will ensure the license server starts automatically each time the system boots.

Optionally, you can set the license server host name and port as environment variables on the client computer:

DBSCHEMA_FLS_HOST and DBSCHEMA_FLS_PORT.

Connect to the Database

Connect to the database using:

connection mysql -dbms MySql -h localhost -u dbschema -p dbschema12 -d sakila

Before creating database connections, you have to download the JDBC drivers for the databases you are working with. To do this, execute the following command in DbSchemaCLI:

download driver {RDBMS}

Here {RDBMS} is one of the database names from this list; the name is case sensitive.

Example: download driver PostgreSQL or download driver MySql. The drivers will be downloaded to C:\Users\YourUser\.DbSchema\drivers. Alternatively, download the JDBC driver .jar files yourself and place them in /home/users/.DbSchema/drivers/<rdbms>.

In the user home directory (C:\Users\YourUser or /home/YourUser), DbSchemaCLI creates a folder .DbSchema/cli with a file init.sql. DbSchemaCLI executes this script at startup, so it is easy to define the database connections right in this file.

- register driver <rdbms> <driverClass> <URL> <"default_parameters">

- connection <name> <rdbms> <"parameters">

Example:

register driver PostgreSql org.postgresql.Driver jdbc:postgresql://{HOST}:{PORT}/{DB} "port=5432"

connection pos1 PostgreSql "user=postgres password=secret host=localhost db=sakila"

register driver Oracle oracle.jdbc.OracleDriver jdbc:oracle:thin:@{HOST}:{PORT}:{DB} "port=1521"

connection ora1 Oracle "user='sys as sysdba' password=secret host=188.21.11.1 db=prod"

The register driver command defines, for each RDBMS (MySql, Postgres, Oracle, ...):

- the Java driver class

- the JDBC URL with tags {...}

- default values for some tags, like the port

This way of defining connections is similar to PostgreSQL configurations. In the advanced section, you will find examples of how to create a new connection from an existing one, by changing one connection parameter. We call this 'derive connection'.

The connection command defines the connection by setting the real values for each URL parameter. The RDBMS from the register driver command should match the RDBMS from the connection command.
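To make the relationship between the two commands concrete, here is a minimal Python sketch of how a URL template, its default parameters, and the connection parameters might combine into a JDBC URL. It is an illustration only, not DbSchemaCLI's implementation: the function names `parse_params` and `resolve_url`, and the rule that a tag such as {HOST} maps to the lower-case parameter `host`, are assumptions made for this example.

```python
import re
import shlex


def parse_params(text):
    """Parse 'key=value key2=value2' into a dict; quoted values may contain spaces."""
    return dict(item.split("=", 1) for item in shlex.split(text))


def resolve_url(template, default_params, connection_params):
    """Fill the {TAG} placeholders of a URL template.

    Connection parameters override the driver defaults (assumed precedence).
    """
    merged = {**parse_params(default_params), **parse_params(connection_params)}
    # Assumption: the tag {HOST} is filled from the parameter named 'host', and so on.
    return re.sub(r"\{([A-Z]+)\}", lambda m: merged[m.group(1).lower()], template)


url = resolve_url(
    "jdbc:postgresql://{HOST}:{PORT}/{DB}",
    "port=5432",
    "user=postgres password=secret host=localhost db=sakila",
)
print(url)  # jdbc:postgresql://localhost:5432/sakila
```

In this model the port comes from the driver defaults because the connection does not set it; adding port=5433 to the connection parameters would override it.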

The complete list of known drivers is the same as for DbSchema.

Execute Queries on Multiple Databases

This operation assumes that the queried tables exist in each of the connected databases. The connections 'pos1' and 'pos2' should already be defined.

connect pos1,pos2

SELECT * FROM sakila.city;

Create connection groups.

connection group production pos1,pos2

connect production

SELECT * FROM sakila.city;

Spool the result to a file.

connect production exclude my3

spool /tmp/result.csv

SELECT * FROM sakila.city;

spool off

Use Variables

Define DbSchemaCLI variables using the command 'vset'. A few variables are system variables. If the system variable replace_variables=true, all other variables are replaced in the SQL queries. Use help to list all system variables. Execute show variables to list the defined variables.

vset cli.spool.separator=|

vset format.date=YYYY-MM-dd'T'hh:mm:ss'Z'

vset format.timestamp=YYYY-MM-dd'T'hh:mm:ss'Z'

vset cli.settings.replace_variables=true

vset myid=6230

connect db4

execute export.sql

Where the export.sql script is:

spool /tmp/export_&myid.csv

select * from some_table where id=&myid;

Transfer Data Between Databases

The next command transfers data from the specified database(s) into the currently connected database. The transfer command can use a query to read the data. The column names should match between the source and the target databases. There can be multiple source databases.

connect pos1

transfer into_table from pos2,pos3 using

select id, firstname, lastname from persons;

The transfer runs on a configurable number of threads.

vset cli.settings.transfer_threads=4

Execute 'help' to see all configurable parameters.

There is one reader thread and multiple writer threads. Each writer thread writes a batch of rows and then commits the transaction. A batch contains between 500 and 100,000 records, depending on the number of transferred columns (500 for more than 50 columns, 100,000 for 1 column).
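The one-reader, many-writers arrangement described above can be modelled with a queue. The Python sketch below is a generic illustration of that pattern, not DbSchemaCLI's code: `transfer`, the tiny batch size of 3, and the in-memory list standing in for the target table are all assumptions chosen to keep the example runnable without a database.

```python
import queue
import threading

_DONE = object()  # sentinel telling a writer that no more rows will arrive


def transfer(source_rows, writer_threads=4, batch_size=3):
    """Copy rows using one reader thread and several writer threads.

    Returns the list of committed batches (a stand-in for the target table).
    """
    rows = queue.Queue(maxsize=100)
    committed = []
    lock = threading.Lock()

    def commit(batch):
        # Stand-in for "write the batch, then commit the transaction".
        with lock:
            committed.append(list(batch))

    def reader():
        for row in source_rows:
            rows.put(row)
        for _ in range(writer_threads):
            rows.put(_DONE)  # one sentinel per writer

    def writer():
        batch = []
        while True:
            row = rows.get()
            if row is _DONE:
                break
            batch.append(row)
            if len(batch) >= batch_size:
                commit(batch)
                batch = []
        if batch:  # commit the final, partially filled batch
            commit(batch)

    threads = [threading.Thread(target=reader)]
    threads += [threading.Thread(target=writer) for _ in range(writer_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return committed


people = [(i, f"first{i}", f"last{i}") for i in range(10)]
batches = transfer(people)
print(sum(len(b) for b in batches), "rows committed in", len(batches), "batches")
```

The order in which rows reach the target is not deterministic in this model, since the writers run concurrently.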

Write Groovy Scripts

Many database tasks require more logic than plain SQL. We do this using Groovy, a pure Java scripting language with closures. A Groovy block starts with the keyword groovy and ends at the first line containing only a slash '/'.

Everything that works in Java also works in Groovy, with these improvements:

- Multiline strings (starting and ending with triple quotes): """...."""

- ${...} expressions are evaluated

- Closures sql.eachRow(...){r->...} are similar to Java lambdas

connect db1

groovy

int days = 5

sql.eachRow( """

SELECT personid, firstname, lastname FROM persons p WHERE p.created < sysdate-${days} AND

EXISTS ( SELECT 1 FROM sport_club s WHERE s.personid=p.personid )

ORDER BY personid ASC

""".toString() ) { r ->

println "${r.personid} ${r.firstname} ${r.lastname}"

}

/

Groovy scripts receive two objects:

- 'sql' is the database connection as an SQL object.

- 'connector' is the DbSchemaCLI object for a defined connection

Chunk Database Updates and Deletes

Updating or deleting large amounts of data may require splitting the operation into smaller chunks. This is because a single large operation may cause disk or memory issues and may lead to database locks.

- The 'update' example uses a nested loop with setResultSetHoldability (Postgres).

- The 'delete' example loads the id values into an ArrayList.

import java.sql.ResultSet

sql.setResultSetHoldability( ResultSet.HOLD_CURSORS_OVER_COMMIT)

def i = 0

sql.eachRow( "SELECT personid FROM persons".toString() ){ r ->

sql.execute("UPDATE persons SET ocupation='t' WHERE personid=?".toString(), [ r.personid ])

i++

if ( i > 1000 ){

sql.commit()

i = 0

print "."

}

}

sql.commit()

Here is a deletion example:

List ids = new ArrayList()

sql.eachRow( 'SELECT id FROM large_table' ) { m ->

ids.add( m.id )

}

int cnt = 0;

for ( int id : ids ) {

sql.execute('DELETE FROM large_table WHERE id=?', [id] )

cnt++

if ( cnt > 1000) {

print '.'

sql.commit()

cnt = 0;

}

}

sql.commit()

Create Custom Commands

Here we create a Postgres custom command to compute the disk usage. The command can be executed on multiple databases. Save this script into a file diskUsage.groovy, and use 'register command diskUsage.groovy as disk usage'.

def cli = new CliBuilder(usage:'disk usage ')

cli.h(longOpt:'help', 'Show usage information and quit')

def options = cli.parse(args)

if (options.'help') {

cli.usage()

return

}

sql.execute("DROP TABLE IF EXISTS dbschema_disk_usage")

sql.execute("DROP TABLE IF EXISTS dbschema_disk_usage_logs")

sql.execute("CREATE TABLE dbschema_disk_usage( filesystem text, blocks bigint, used bigint, free bigint, percent text, mount_point text )")

sql.execute("CREATE TABLE dbschema_disk_usage_logs( blocks bigint, folder text )")

sql.execute("COPY dbschema_disk_usage FROM PROGRAM 'df -k | sed \"s/  */,/g\"' WITH ( FORMAT CSV, HEADER ) ")

sql.execute("UPDATE dbschema_disk_usage SET used=used/(1024*1024), free=free/(1024*1024), blocks=blocks/(1024*1024)")

String dbid = sql.firstRow( "SELECT current_database() as dbid").dbid

sql.execute("COPY dbschema_disk_usage_logs FROM PROGRAM 'du -m -s /data/${dbid}/pgsystem/pg_log | sed \"s/\\s\\s*/,/g\"' WITH ( FORMAT CSV ) ".toString())

sql.execute("COPY dbschema_disk_usage_logs FROM PROGRAM 'du -m -s /data/${dbid}/jobsystem/pg_log | sed \"s/\\s\\s*/,/g\"' WITH ( FORMAT CSV ) ".toString())

int logBlocks = sql.firstRow( "SELECT sum(blocks) as usage FROM dbschema_disk_usage_logs").usage

sql.eachRow( "SELECT * FROM dbschema_disk_usage"){ r->

if ( r.filesystem.startsWith( '/dev/mapper') ){

int freePercent = Math.round(r.free*100/(r.blocks))

String status = ( r.free < 30 && r.blocks > 1500 ) ? 'Very Low' : (( r.free < 50 && r.blocks > 1000) || r.free < 30 ) ? 'Low' : "Ok"

String logStatus = logBlocks > 1024 ? String.format( 'pg_logs %dM', logBlocks ) : ''

println String.format( '%10s Free %3d%% ( %dG out of %dG ) %s', status, freePercent, r.free, r.blocks, logStatus )

}

}

sql.execute("DROP TABLE dbschema_disk_usage_logs")

sql.execute("DROP TABLE dbschema_disk_usage")

Create Connections Using Suffixes

There are cases when you have to manage large database clusters with primary and standby databases. I used to manage a cluster where the primary database hostnames were like 'pos1.db', 'pos2.db', ... and the standbys were 'pos1.sbydb', 'pos2.sbydb', ...

For this, I used the following script to create the standby database connections:

groovy

import com.wisecoders.dbs.cli.connection.*

for ( int i=1;i <130; i++){

ConnectionFactory.createConnector( "pos${i}", "postgres", "user=postgres host=pos${i}.db db=sakila${i}" )

}

ConnectionFactory.addSuffix( new Suffix("sby"){

public Connector create( Connector con ){

String host = con.getHost();

if ( host.endsWith(".db") ) return duplicate( con, "host=" + host.replaceFirst(/\.db/, /.sbydb/))

return null

}

})

The script receives a connection. If the connection's host name ends with .db, it duplicates the Connector with a new parameter string in which .db is replaced by .sbydb.

Write Cronjob Scripts

DbSchemaCLI can execute scripts on a regular schedule. If a script fails, an email is sent to the configured addresses.

vset cli.mail.server.host=internal.mailserver

vset cli.mail.server.user=admin

vset cli.mail.server.password=secret

vset cli.mail.from=Admin

vset [email protected]

vset cli.cron.folder=/dbschemacli/cronscripts

On the machine where DbSchemaCLI is running, add this line to /etc/crontab, first fixing the path to DbSchemaCLI:

01 * * * * /usr/local/bin/dbschemacli -cron

Save the script files in /dbschemacli/cronscripts/. Example:

sales_report.schedule_hour(13)_dayOfWeek(1).sql

usage_report.schedule_minute(each5).sql

load_report.schedule_dayOfMonth(1).sql

DbSchemaCLI checks for file names matching the pattern 'schedule_' followed by one of 'minute|hour|dayOfWeek|dayOfMonth|dayOfYear|month|year'. After this, a pair of parentheses '()' is expected, containing one of the following:

- number (1)

- a list of numbers (1,2,5)

- a range (1-10)

- an expression 'each<number>', for example: 'each5'.

If a script fails, an email is sent to the database admin. If a file has the '.groovy' extension, the script is executed using Groovy.
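As an illustration of the naming convention above, the following Python sketch extracts the schedule fields from a script file name and checks a value against one field. It is not DbSchemaCLI's parser: the helper names `parse_schedule` and `value_matches` are hypothetical, and the interpretation of each form (ranges being inclusive, 'each5' meaning every value divisible by 5) is an assumption.

```python
import re

UNITS = "minute|hour|dayOfWeek|dayOfMonth|dayOfYear|month|year"
# One schedule field: a unit name followed by a value in parentheses.
FIELD = re.compile(rf"({UNITS})\(([^()]*)\)")


def parse_schedule(file_name):
    """Return {unit: value} for the fields that follow 'schedule_' in a file name."""
    _, marker, tail = file_name.partition("schedule_")
    if not marker:
        return {}  # no schedule in this file name
    return dict(FIELD.findall(tail))


def value_matches(spec, n):
    """Check n against a number, a list '1,2,5', a range '1-10', or 'each<number>'."""
    if spec.startswith("each"):
        return n % int(spec[4:]) == 0  # assumed meaning of 'each5'
    if "-" in spec:
        low, high = map(int, spec.split("-"))
        return low <= n <= high  # assumed inclusive on both ends
    return n in {int(part) for part in spec.split(",")}


for name in (
    "sales_report.schedule_hour(13)_dayOfWeek(1).sql",
    "usage_report.schedule_minute(each5).sql",
):
    print(name, "->", parse_schedule(name))
```

Keeping parsing separate from matching makes it easy to test the file names in a cron folder before relying on them.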

Upload Reports on [s]ftp Server

You can upload CSV output files to FTP or SFTP servers. The command is upload [-d] ftpURL fileName. The -d option removes the local file. The upload uses Apache Commons VFS, with support for all of its protocols. Example:

upload sftp://[email protected]/intoFolder report.csv

Store Passwords in a Separate File

You may need to store passwords outside the init.sql file where the database connections are defined, for example to keep init.sql in Git without making passwords public. For this, passwords can be stored in a separate file, ~/.DbSchema/dbshell/pass.txt or ~/.pgpass (for compatibility with PostgreSQL). The file format is the same as the PostgreSQL pgpass file:

hostname:port:database:username:password

Here we set the password 'secret' for all hosts, port 1521, database 'sakila', and user 'postgres':

*:1521:sakila:postgres:secret

The connection should specify password=<pgpass> in its parameters to use the password from the pass.txt or .pgpass file.
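The lookup implied by this format can be sketched in a few lines of Python. This follows the PostgreSQL pgpass convention, where the first matching line wins and * matches any value; whether DbSchemaCLI resolves entries in exactly the same way is an assumption. The function name `lookup_password` is hypothetical, and backslash escaping of ':' inside fields is left out for brevity.

```python
def lookup_password(pass_lines, host, port, database, user):
    """Return the password of the first line matching the connection, or None."""
    wanted = (host, str(port), database, user)
    for line in pass_lines:
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        fields = line.split(":", 4)  # the password itself may contain ':'
        if len(fields) != 5:
            continue  # malformed line
        *patterns, password = fields
        if all(p == "*" or p == w for p, w in zip(patterns, wanted)):
            return password
    return None


pass_file = [
    "# hostname:port:database:username:password",
    "*:1521:sakila:postgres:secret",
]
print(lookup_password(pass_file, "pos1.db", 1521, "sakila", "postgres"))  # secret
```

Because the first match wins, more specific entries should be placed above wildcard entries in the file.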

DbSchemaCLI Start Parameters

DbSchemaCLI can be started with the following parameters:

- '-cron' to start in crontab mode, details in the Cronjob Documentation.

- A script path to execute the given script.