Design Better Databases - From Theory to Tools with DbSchema

Originally written in German by Dr. Veikko Krypczyk

Published in PHP Magazin 3/2025

Read the original article here (German)

Database Design: Goals, Challenges, and Tool Usage

Databases remain the "bread and butter" of software development. PHP developers in particular can attest to this, since PHP is well suited to working with databases. Designing database schemas requires extensive knowledge and is often time-consuming: the database must be normalized, the correct keys must be defined, and so on. For these comprehensive and complex tasks, a universal tool like DbSchema can be very helpful.

Real-World Relevance

A colleague recently told me about an investor who, when evaluating a potential investment, included the structure and condition of the company’s databases in their decision. The databases were analyzed for their structure in order to assess the technical state of the company’s software. Whether this carries that much weight in everyday practice is debatable. What is clear, however, is that the long-term viability of enterprise software depends significantly on a well-thought-out and structured database.

Databases are the backbone of modern IT systems. They store, organize, and enable access to large volumes of information used in various applications - from simple websites to complex enterprise systems. A well-planned database structure is crucial for application efficiency, security, and scalability. Design flaws can lead to reduced performance, data inconsistencies, or increased maintenance efforts.

The goal of sound database design is to strike a balance between usability, data security, and performance. This includes clear structures, minimal redundancy, and a clean separation of data - which not only improves data integrity but also makes future changes easier.

This article provides a comprehensive overview of the core aspects of database structures. We begin with the fundamentals of database design and then explain the normalization principles that help avoid redundancy and ensure data consistency. After that, we explore table structures and the significance of relational databases, which are among the most widely used models today.

Special attention is given to primary, foreign, and secondary keys, and their role in organizing and linking data. We conclude by introducing a tool that assists with visualizing and analyzing database structures to ease design and maintenance.

This article is aimed at developers, administrators, and anyone interested in building efficient and well-structured databases.

Fundamentals of Database Design

Database design refers to the structured process of creating a database to efficiently store, manage, and process data. The goal is to build a database that:

- Ensures data integrity (accuracy and consistency of stored data),

- Performs well even with growing volumes of data,

- Remains flexible to respond to future requirements,

- Is easy to maintain to support updates and extensions.

Good database design avoids redundancy, reduces potential sources of error, and ensures data is organized in a logical and consistent way. Key principles include:

1. Clarity and Simplicity

A well-structured design should be easy to understand. Tables and relationships must be clearly defined. This facilitates maintenance and collaboration with other developers or administrators.

Example: Instead of storing all information about customers and orders in one table, separate them into distinct tables (normalization).

2. Data Integrity

Ensuring correctness and consistency of data:

- Entity Integrity: Every table must have a primary key that uniquely identifies each row.

- Referential Integrity: Relationships between tables (e.g., customers and orders) must be secured through foreign keys. For example, an order can't exist without a valid customer.

- Domain Integrity: Enforces valid values using data types, constraints, and ranges.
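The three kinds of integrity can be declared directly in the schema. The following is a minimal sketch, assuming an in-memory SQLite database accessed through Python's standard `sqlite3` module; the helper names `build_integrity_demo` and `is_rejected` and the `total_amount >= 0` rule are illustrative assumptions, not part of the original article. Other systems such as MySQL or PostgreSQL use slightly different syntax.

```python
import sqlite3


def build_integrity_demo() -> sqlite3.Connection:
    """Create an in-memory schema that declares all three kinds of integrity."""
    conn = sqlite3.connect(":memory:")
    # SQLite only enforces foreign keys when this pragma is switched on.
    conn.execute("PRAGMA foreign_keys = ON")
    conn.executescript(
        """
        CREATE TABLE Customers (
            customer_id INTEGER PRIMARY KEY,              -- entity integrity
            name        TEXT NOT NULL
        );
        CREATE TABLE Orders (
            order_id     INTEGER PRIMARY KEY,             -- entity integrity
            customer_id  INTEGER NOT NULL
                         REFERENCES Customers(customer_id),   -- referential integrity
            total_amount REAL NOT NULL
                         CHECK (total_amount >= 0)        -- domain integrity (assumed rule)
        );
        """
    )
    return conn


def is_rejected(conn: sqlite3.Connection, sql: str) -> bool:
    """Return True if the database refuses the statement with an integrity error."""
    try:
        with conn:  # commits on success, rolls back on failure
            conn.execute(sql)
        return False
    except sqlite3.IntegrityError:
        return True
```

With a connection from `build_integrity_demo()`, inserting a second customer with an existing `customer_id`, an order for a customer that does not exist, or an order with a negative amount should each make `is_rejected` return `True`, while a valid row is accepted.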

3. Avoid Redundancy

Redundancy means storing the same data multiple times. This can cause inconsistencies and waste storage. Normalization helps minimize redundancy by splitting data into separate tables and clearly defining relationships.

Example: Without normalization, a customer’s name might be repeated in every order. With normalization, the name is stored once in the Customers table.
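To illustrate the benefit, the sketch below stores the name once and changes it with a single UPDATE; every order then shows the new name through a join. It assumes SQLite via Python's `sqlite3` module, and the function name `rename_customer_demo`, the sample orders, and the alternative spelling 'Mueller' are made up for illustration.

```python
import sqlite3


def rename_customer_demo():
    """Update a customer's name in one place and read it back for every order."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE Customers (
            customer_id INTEGER PRIMARY KEY,
            name        TEXT NOT NULL
        );
        CREATE TABLE Orders (
            order_id    INTEGER PRIMARY KEY,
            customer_id INTEGER NOT NULL REFERENCES Customers(customer_id)
        );
        INSERT INTO Customers VALUES (1, 'Müller');
        INSERT INTO Orders VALUES (101, 1), (102, 1), (103, 1);
        """
    )
    # The name lives in exactly one row, so one UPDATE is enough.
    cursor = conn.execute(
        "UPDATE Customers SET name = 'Mueller' WHERE customer_id = 1"
    )
    rows_changed = cursor.rowcount
    # Every order picks up the current name through the join.
    names_per_order = conn.execute(
        """
        SELECT o.order_id, c.name
        FROM Orders AS o
        JOIN Customers AS c ON c.customer_id = o.customer_id
        ORDER BY o.order_id
        """
    ).fetchall()
    conn.close()
    return rows_changed, names_per_order
```

In a denormalized table the same change would have to touch one row per order, and any missed row would leave the data inconsistent.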

4. Flexibility and Scalability

A database should adapt to growing data and evolving requirements:

- New columns or tables should be easy to add.

- The system should remain efficient even as data scales.

Example: In an online shop, adding new product categories should be possible without redesigning the entire database.
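A small sketch of what this can look like in practice, assuming SQLite through Python's `sqlite3` module: when categories live in their own table, a new category is simply a new row, and a new requirement becomes one added column. The table layout, the `description` column, and the function name `extend_shop_schema` are illustrative assumptions.

```python
import sqlite3


def extend_shop_schema():
    """Add a category and a column without redesigning existing tables."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE Categories (
            category_id INTEGER PRIMARY KEY,
            name        TEXT NOT NULL UNIQUE
        );
        CREATE TABLE Products (
            product_id  INTEGER PRIMARY KEY,
            name        TEXT NOT NULL,
            category_id INTEGER NOT NULL REFERENCES Categories(category_id)
        );
        INSERT INTO Categories (name) VALUES ('Laptops');
        """
    )
    # A new product category is just another row; no table definition changes.
    conn.execute("INSERT INTO Categories (name) VALUES ('Monitors')")
    # A new requirement (a description per category) becomes one added column.
    conn.execute("ALTER TABLE Categories ADD COLUMN description TEXT")

    # PRAGMA table_info returns one row per column; index 1 is the column name.
    columns = [row[1] for row in conn.execute("PRAGMA table_info(Categories)")]
    names = [
        row[0]
        for row in conn.execute("SELECT name FROM Categories ORDER BY category_id")
    ]
    conn.close()
    return columns, names
```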

5. Performance

Good design improves query speed and overall performance:

- Indexing: Speeds up queries on frequently accessed columns.

- Query Optimization: Clean designs enable efficient SQL queries.

- Partitioning: Splits large tables to improve access times.

Example: Partition a large Orders table by year to retrieve data faster for specific time ranges.

Common Steps in Database Design

The common steps in designing a database are summarized in the table below.

| Step | Explanation | Example |

|---|---|---|

| Requirements Analysis | The goal is to understand the application's requirements: What data should be stored? How are the data elements connected? What queries and reports are needed frequently? | For an online store, you need to consider customers, products, orders, and categories. |

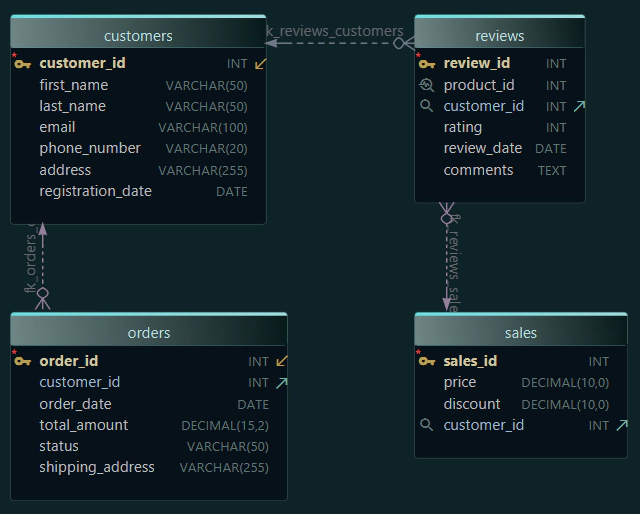

| Conceptual Model | Create an entity-relationship diagram (ERD) that graphically represents entities (e.g., customers, orders) and their relationships. | A customer can place multiple orders (1:n relationship). |

| Logical Model | Translate the ERD into tables and columns. Define primary and foreign keys, as well as constraints. | Customers table: customer_id (PK), name, address, phone. Orders table: order_id (PK), customer_id (FK), date, total_amount. |

| Physical Model | Implement in a specific database system such as MySQL or PostgreSQL. Optimize physical storage through indexes and partitions. | Create SQL tables for orders. Add indexes to frequently queried columns (e.g., customer_id, order_id). |

Summary: A well-designed database forms the foundation of efficient, maintainable systems. It ensures clear structure, minimizes redundancy, guarantees data integrity, and maintains performance even with large data volumes. By applying the described principles and methods, common errors can be avoided and robust database systems can be created.

Normalization

Normalization is a critical step in database design aimed at minimizing redundancy and ensuring data consistency. It involves splitting the database into several smaller, logically connected tables, where each table focuses on a specific subject or entity.

This approach improves data integrity and simplifies maintenance. However, it can also impact query performance, which is why a balance between normalization and performance should be carefully considered.

Normalization is divided into several phases, known as normal forms (NF).

Understanding Normal Forms - 1NF, 2NF, 3NF

First Normal Form (1NF)

A table is in 1NF when:

- All values are atomic (indivisible).

- Each column holds values of a single data type.

- No repeating groups or arrays exist in a column.

Example before normalization:

| customer_id | name | products |

|---|---|---|

| 1 | Müller | Laptop, Mouse |

| 2 | Schmidt | Keyboard, Monitor |

Here, the products column contains multiple values. This violates 1NF.

After applying 1NF:

| customer_id | name | product |

|---|---|---|

| 1 | Müller | Laptop |

| 1 | Müller | Mouse |

| 2 | Schmidt | Keyboard |

| 2 | Schmidt | Monitor |

This structure ensures atomic values in each field.
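The transformation itself is mechanical. As a minimal sketch in plain Python, assuming the multi-valued field is a comma-separated string as in the example table (the function name `to_first_normal_form` is hypothetical):

```python
def to_first_normal_form(rows):
    """Split a comma-separated 'products' field into one row per product."""
    atomic_rows = []
    for customer_id, name, products in rows:
        for product in products.split(","):
            product = product.strip()
            if product:  # skip empty fragments such as a trailing comma
                atomic_rows.append((customer_id, name, product))
    return atomic_rows


# The rows from the table before normalization.
unnormalized = [
    (1, "Müller", "Laptop, Mouse"),
    (2, "Schmidt", "Keyboard, Monitor"),
]
```

Calling `to_first_normal_form(unnormalized)` yields the four rows of the table above. Note that the customer name is now repeated per product, which is the redundancy the following normal forms address.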

Second Normal Form (2NF)

A table is in 2NF when:

- It is already in 1NF.

- All non-key attributes are fully dependent on the whole primary key (no partial dependencies).

Example before normalization:

| order_id | customer_name | product | price |

|---|---|---|---|

| 101 | Müller | Laptop | 1000 |

| 102 | Schmidt | Mouse | 20 |

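Here, customer_name describes the customer, not the order. Partial dependencies arise when the key is composite: if one order could contain several products, the key would be (order_id, product), and customer_name would depend on order_id alone. Customer data therefore belongs in its own Customers table.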

Split into two tables:

Customers

| customer_id | customer_name |

|---|---|

| 1 | Müller |

| 2 | Schmidt |

Orders

| order_id | customer_id | product | price |

|---|---|---|---|

| 101 | 1 | Laptop | 1000 |

| 102 | 2 | Mouse | 20 |

Third Normal Form (3NF)

A table is in 3NF when:

- It is in 2NF.

- No non-key attributes depend on other non-key attributes (no transitive dependencies).

Example before normalization:

| order_id | customer_id | customer_address | product |

|---|---|---|---|

| 101 | 1 | Berlin | Laptop |

| 102 | 2 | Hamburg | Mouse |

customer_address depends on customer_id, a non-key attribute, and therefore only transitively on the key order_id.

Split into two tables:

Customers

| customer_id | customer_address |

|---|---|

| 1 | Berlin |

| 2 | Hamburg |

Orders

| order_id | customer_id | product |

|---|---|---|

| 101 | 1 | Laptop |

| 102 | 2 | Mouse |
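The split loses no information: a join over customer_id reproduces the original rows, while each address is stored only once. The following sketch checks this with SQLite through Python's `sqlite3` module; the function name `decompose_and_rejoin` is an assumption for illustration, and `INSERT OR IGNORE` is SQLite-specific syntax.

```python
import sqlite3

# The rows from the table before normalization.
wide_rows = [
    (101, 1, "Berlin", "Laptop"),
    (102, 2, "Hamburg", "Mouse"),
]


def decompose_and_rejoin(rows):
    """Split wide order rows into Customers and Orders, then join them again."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE Customers (
            customer_id      INTEGER PRIMARY KEY,
            customer_address TEXT NOT NULL
        );
        CREATE TABLE Orders (
            order_id    INTEGER PRIMARY KEY,
            customer_id INTEGER NOT NULL REFERENCES Customers(customer_id),
            product     TEXT NOT NULL
        );
        """
    )
    for order_id, customer_id, address, product in rows:
        # Each customer is stored once; repeated occurrences are ignored.
        conn.execute(
            "INSERT OR IGNORE INTO Customers VALUES (?, ?)", (customer_id, address)
        )
        conn.execute(
            "INSERT INTO Orders VALUES (?, ?, ?)", (order_id, customer_id, product)
        )
    customer_count = conn.execute("SELECT COUNT(*) FROM Customers").fetchone()[0]
    rejoined = conn.execute(
        """
        SELECT o.order_id, o.customer_id, c.customer_address, o.product
        FROM Orders AS o
        JOIN Customers AS c ON c.customer_id = o.customer_id
        ORDER BY o.order_id
        """
    ).fetchall()
    conn.close()
    return customer_count, rejoined
```

If a customer places a further order, the Customers table does not grow, and a change of address affects a single row.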

Keys in Databases - Primary, Foreign, and Secondary Keys

Primary Key

A primary key is a column (or a combination of columns) that uniquely identifies each row in a table. It must be:

- Unique (no duplicates allowed)

- Not NULL (every row must have a value)

Example - Customers table:

| customer_id (PK) | name | address |

|---|---|---|

| 1 | Müller | Berlin |

| 2 | Schmidt | Hamburg |

SQL Definition:

    CREATE TABLE Customers (
        customer_id INT PRIMARY KEY,
        name VARCHAR(255),
        address VARCHAR(255)
    );

Foreign Key

A foreign key is a column that links one table to the primary key of another, enforcing referential integrity.

Example - Orders table:

| order_id | customer_id (FK) | date |

|---|---|---|

| 101 | 1 | 2025-01-01 |

| 102 | 2 | 2025-01-05 |

SQL Definition:

    CREATE TABLE Orders (
        order_id INT PRIMARY KEY,
        customer_id INT,
        date DATE,
        FOREIGN KEY (customer_id) REFERENCES Customers(customer_id)
    );

Secondary Key

A secondary key is any column (or group of columns) that is indexed to speed up searches. Unlike a primary key, it does not have to be unique.

Example - phone in Customers table:

| customer_id | name | phone |

|---|---|---|

| 1 | Müller | 0723-731-652 |

| 2 | Schmidt | 0723-731-631 |

SQL Definition:

CREATE INDEX idx_phone ON Customers(phone);
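Whether the index is actually used can be checked with the database's query planner. The sketch below assumes SQLite through Python's `sqlite3` module and its `EXPLAIN QUERY PLAN` statement; MySQL and PostgreSQL offer `EXPLAIN` with different output. The function name `phone_lookup_plans` is hypothetical, and the exact wording of the plan text varies between SQLite versions.

```python
import sqlite3


def phone_lookup_plans():
    """Return SQLite's query plan for a phone lookup before and after indexing."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE Customers ("
        "customer_id INTEGER PRIMARY KEY, name TEXT, phone TEXT)"
    )
    conn.executemany(
        "INSERT INTO Customers VALUES (?, ?, ?)",
        [(1, "Müller", "0723-731-652"), (2, "Schmidt", "0723-731-631")],
    )
    query = "EXPLAIN QUERY PLAN SELECT name FROM Customers WHERE phone = ?"

    def plan():
        # The fourth column of each plan row holds the human-readable detail.
        rows = conn.execute(query, ("0723-731-652",)).fetchall()
        return " | ".join(row[3] for row in rows)

    before = plan()  # without an index the table has to be scanned
    conn.execute("CREATE INDEX idx_phone ON Customers(phone)")
    after = plan()   # with the index the planner can search idx_phone
    conn.close()
    return before, after
```

The first plan should describe a scan of the whole table, the second a search using idx_phone. On a table with two rows the difference is not measurable, but on large tables it determines whether a lookup reads every row or only a few index entries.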

Tools for Visualizing, Maintaining, and Analyzing Database Structures

Visualizing and analyzing database structures are essential tasks for understanding, documenting, and managing complex systems. Specialized tools allow developers and DBAs to create diagrams, synchronize schemas, document models, and optimize performance.

In large-scale environments, databases may contain hundreds of tables and relationships. Without proper tools, managing them becomes difficult and error-prone. Common challenges include query analysis, schema version tracking, and performance tuning.

A key requirement is the ability to work collaboratively. Developers often make schema changes locally and must merge them with a central model. Visual tools streamline this, prevent conflicts, and enhance productivity.

Why Tools Matter

- Visual diagrams clarify relationships and structures.

- Schema synchronization ensures consistent environments.

- HTML5 documentation helps onboard new team members.

- Automated processes save time and reduce errors.

DbSchema: A Comprehensive Database Design Tool

DbSchema is a universal visual database tool that supports over 80 databases, including MySQL, PostgreSQL, SQL Server, and MongoDB. It is available on Windows, macOS, and Linux.

Key Features

- Visual Schema Design: Drag-and-drop tables in ER diagrams.

- Team Collaboration via Git: Save designs as XML files and track changes in Git.

- Schema Synchronization: Compare model vs. database and generate migration scripts.

- Interactive HTML5 Documentation: Export schema diagrams with mouseover comments.

- Data Editor: Browse and edit related data across multiple tables.

- Visual Query Builder: Build SQL queries without writing code.

- Data Generator: Populate tables with test data based on patterns or regex.

- Automation Scripts: Run Groovy scripts for tasks like backups and documentation.

Collaboration & Git Integration

DbSchema stores the database model as an XML file, making it ideal for version control with Git:

- Clone a central repository.

- Commit & Push local changes.

- Pull updates from the team.

This method allows offline work and full version history without relying on cloud storage.

Security & Compliance

DbSchema supports self-hosted workflows and complies with ISO/IEC 27001 standards. Companies handling sensitive data can use DbSchema without exposing models to third-party services.

HTML5 Documentation

DbSchema can export the entire schema to an interactive HTML5 format with:

- Table diagrams

- Comments

- Tooltip descriptions

This is useful for internal wikis and onboarding developers quickly.

Relational Data Explorer

The visual data explorer allows:

- Cross-table navigation using real or virtual foreign keys

- Editing linked rows without writing SQL

- Understanding complex data relationships easily

Automation with Groovy Scripts

You can automate recurring tasks like:

- Nightly schema synchronization

- Model-database comparison

- Documentation export

This reduces manual work and ensures consistency across environments.

Conclusion and Outlook

A carefully planned and implemented database design forms the foundation of powerful and maintainable IT systems. It ensures clear structures, minimizes redundancies, and guarantees data integrity.

Modern tools like DbSchema support developers and administrators not only in planning and visualizing schemas, but also in team collaboration and automating recurring tasks. Especially in complex systems with hundreds of tables and relationships, these tools offer significant advantages in efficiency, clarity, and long-term maintainability.

With the growing adoption of cloud databases and NoSQL technologies, new challenges are emerging - particularly around distributed systems and Big Data. Future-ready database design may involve hybrid strategies that combine relational and non-relational approaches.

As development ecosystems evolve, investing time into thoughtful schema design - supported by powerful tools - remains a critical success factor for any software project.

References

This article is an English translation of "Erfolgsfaktor Datenbankdesign" by Dr. Veikko Krypczyk, originally published in PHP Magazin 3/2025.

All rights remain with the original author and publisher. This version is provided for educational and informational purposes.

Author’s website: https://larinet.com